LLM Council

A “shadow board” of AI models that pressure-tests my thinking.

Most AI tools try to be helpful. LLM Council is designed to be usefully critical. It runs a draft through multiple models with distinct roles, surfaces what sounds vague or inflated, and produces a cleaner final output—plus transparent “show your work” reasoning so users can trust the edits.

Role

Solo Builder

Duration

1 day

Tools

Replit, ChatGPT

inspiration

I was an early ChatGPT adopter while studying data science at UChicago, but for a long time it was the only LLM I actually used.

However, during the first summer of the MMM program at Northwestern, a Segal Design workshop on agentic AI for PM productivity showed me how powerful it is to iterate with multiple models side by side.

Around the same time, I kept coming back to the idea that we should use “adversarial” intelligences to check one another (a perspective I’d heard echoed by computational social scientist James Evans).

When I discovered Andrej Karpathy’s LLM Council repo, I decided to build a simpler web-based version so non-technical users could get the benefits of a multi-LLM council without complex setup.

prototype

This was my first vibe-coding build in Replit. I focused on the three models I use most (ChatGPT, Gemini, and Claude) and designed the experience around a simple pattern: generate, critique, revise.

The goal was to create a flow that makes feedback immediately actionable by structuring responses into distinct perspectives and a clean synthesis step.

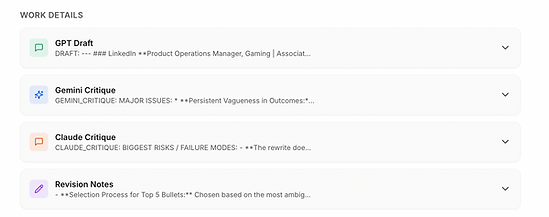

To keep the critique transparent and actionable, the Council organizes feedback into a review stack:

- GPT Draft: generates an initial rewrite
- Gemini Critique: flags vagueness, missing baselines, and attribution gaps
- Claude Critique: stress-tests credibility (buzzwords, weak claims, failure modes)
- Revision Notes: selects the “top 5” weakest bullets (the ones a skeptical reader would flag first) and consolidates the fixes into final edits
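To make the generate, critique, revise pattern concrete, here is a minimal Python sketch of the review stack as a sequential pipeline. It is an illustration, not the actual Replit build: `call_model` is a hypothetical stand-in for real provider SDK calls (it returns a labeled echo so the sketch runs offline), the role prompts are paraphrased from the list above, and having GPT also write the revision notes is an assumption. The `on_status` callback shows one possible way to feed loading-state messages to the UI.

```python
from dataclasses import dataclass, field
from typing import Callable

# Hypothetical role prompts, paraphrased from the review stack.
ROLES = {
    "draft": "Generate an initial rewrite of the user's text.",
    "gemini_critique": "Flag vagueness, missing baselines, and attribution gaps.",
    "claude_critique": "Stress-test credibility: buzzwords, weak claims, failure modes.",
    "revision": "Pick the top 5 weakest bullets and consolidate fixes into final edits.",
}


def call_model(model: str, instructions: str, content: str) -> str:
    """Hypothetical stand-in for a real provider call (OpenAI, Gemini, Anthropic SDKs).

    Returns a labeled echo so the pipeline runs without API keys.
    """
    return f"[{model}] {instructions} <- {len(content)} chars of input"


@dataclass
class CouncilRun:
    draft: str = ""
    critiques: dict[str, str] = field(default_factory=dict)
    revision: str = ""


def run_council(user_prompt: str, on_status: Callable[[str], None] = print) -> CouncilRun:
    run = CouncilRun()

    # 1. Generate: one model produces the first rewrite.
    on_status("Drafting a first rewrite...")
    run.draft = call_model("gpt", ROLES["draft"], user_prompt)

    # 2. Critique: each critic sees the original prompt plus the draft.
    for model, role in [("gemini", "gemini_critique"), ("claude", "claude_critique")]:
        on_status(f"Running {model} critique...")
        run.critiques[model] = call_model(model, ROLES[role], user_prompt + "\n\n" + run.draft)

    # 3. Revise: consolidate the draft and all critiques into final edits.
    on_status("Consolidating revision notes...")
    bundle = "\n\n".join([run.draft, *run.critiques.values()])
    run.revision = call_model("gpt", ROLES["revision"], bundle)
    return run
```

Because each critic receives only the original prompt plus the draft, the two critiques stay independent of each other. Passing earlier critiques forward instead would turn the stack into a chain where later models respond to earlier ones.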

For this demo, I used the following prompt as the test case:

Be a skeptical recruiter. What sounds vague, inflated, or unproven in my resume experience section? Rewrite the top 5 bullets with stronger evidence and tighter verbs.

For the result, also provide cleaner, more credible bullets and a clearer ‘why you’ narrative.

outcome

To make the experience feel polished and transparent, I added a loading state that tells users what’s happening behind the scenes while the council generates and critiques.

Once the run completes, users can review the models’ critiques side by side, compare where they disagree, and walk away with tangible outputs:

- sharper, more credible resume bullets
- a clearer narrative the candidate can reuse in interviews and networking

Scroll to the bottom of this writeup to see the sample output from this test run.

learnings

- Prototyping has real cost constraints

Replit was a great way to move fast, but I quickly hit a practical limit: I maxed out my usage credits and had to upgrade. It was a good reminder that “vibe coding” still has real compute and API economics, especially when a single user action fans out into multiple model calls. In the next iteration, I’d add basic cost controls (rate limits, caching, and lighter “preview” runs) so the experience stays affordable and predictable.
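Those cost controls could start as small as the Python sketch below, which pairs a per-user sliding-window rate limit with a response cache keyed on the exact model and prompt. Everything here (`CostGuard`, its parameters, the in-memory storage) is hypothetical and not part of the prototype; a deployed app would likely need persistent storage shared across server processes.

```python
import hashlib
import time
from collections import defaultdict, deque
from typing import Callable


class CostGuard:
    """Hypothetical cost controls: a per-user rate limit plus a response cache."""

    def __init__(self, max_runs: int, window_seconds: float,
                 clock: Callable[[], float] = time.monotonic):
        self.max_runs = max_runs
        self.window = window_seconds
        self.clock = clock  # injectable so the limiter can be tested without sleeping
        self.history: dict[str, deque] = defaultdict(deque)  # user -> run timestamps
        self.cache: dict[str, str] = {}  # sha256(model, prompt) -> response
        self.calls_made = 0  # paid calls actually sent to a provider

    def allow(self, user_id: str) -> bool:
        """Sliding window: at most max_runs council runs per user per window."""
        now = self.clock()
        runs = self.history[user_id]
        while runs and now - runs[0] >= self.window:
            runs.popleft()  # forget runs that fell out of the window
        if len(runs) >= self.max_runs:
            return False
        runs.append(now)
        return True

    def cached_call(self, model: str, prompt: str, call: Callable[[str, str], str]) -> str:
        """Reuse an earlier response when the same model sees the exact same prompt."""
        key = hashlib.sha256(f"{model}\x00{prompt}".encode()).hexdigest()
        if key not in self.cache:
            self.calls_made += 1
            self.cache[key] = call(model, prompt)
        return self.cache[key]
```

In a council run, `allow` would gate the submit action before any model is called, and `cached_call` would wrap each per-step model call, so resubmitting an unchanged draft would not trigger new paid calls.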

- Model orchestration matters more than the models themselves

I initially hard-coded the critique sequence (with Claude always last), but the output made it obvious that different models shine in different roles depending on the prompt. Next, I want to make the council adaptive: define what each model is “best at” (e.g., logic/evidence check, tone/credibility red-team, structured rewrite), then use a lightweight routing agent to choose the order, and even which critiques to run, based on the user’s use case. That would make the council feel less like three parallel opinions and more like an intentional review workflow.
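Before building a full routing agent, a plain rule-based router is one way to prototype the adaptive council. In the Python sketch below, the strength labels, keyword cues, and `route_critiques` function are all hypothetical; the model-to-strength mapping is loosely inferred from the review stack roles, and a real routing agent would replace the keyword matching with a model call that classifies the use case.

```python
# Hypothetical capability map: the review role each model is assumed to be best at.
STRENGTHS = {
    "gemini": {"evidence"},
    "claude": {"credibility"},
    "gpt": {"rewrite"},
}

# Hypothetical keyword cues signaling which kinds of review a prompt needs.
CUES = {
    "evidence": ("metric", "baseline", "data", "unproven", "evidence"),
    "credibility": ("skeptical", "inflated", "buzzword", "recruiter", "vague"),
    "rewrite": ("rewrite", "tighten", "edit"),
}


def route_critiques(prompt: str) -> list[str]:
    """Return the models to run, ordered by how many of their cues the prompt hits."""
    text = prompt.lower()
    # Count keyword hits per review need (substring match, so "metrics" hits "metric").
    needs = {need: sum(cue in text for cue in cues) for need, cues in CUES.items()}
    scored = []
    for model, skills in STRENGTHS.items():
        score = sum(needs[s] for s in skills)
        if score > 0:  # skip steps the prompt gives no reason to run
            scored.append((score, model))
    # Highest score first; ties broken alphabetically so the order is deterministic.
    scored.sort(key=lambda pair: (-pair[0], pair[1]))
    return [model for _, model in scored]
```

On the demo prompt, credibility cues ("skeptical", "inflated", "recruiter", "vague") outnumber evidence cues, so this router would put the Claude critique first rather than last.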


Below is a sample of what the LLM Council produced at each step.

Sampled ChatGPT initial draft:

ASSUMPTIONS:
- The candidate welcomes feedback for tightening claims into measurable, results-driven language.
- There’s room to reduce buzzwords and better attribute credit to tools or stakeholders involved in achievements.
- The goal is emphasizing impact without overinflating ambiguous outcomes.

RISKY AREAS / UNCERTAINTIES:
- Phrases like “partnered with Engineering” or “led audits” could benefit from numerical clarity—how many audits or specific metrics of collaboration?
- “20K weekly user interactions” and “+1% annualized WAU gain” are compelling numbers but lack broader context—what’s the baseline relative to LinkedIn’s operations?
- The shift to subscription pricing is cited as effective but doesn’t quantify success with revenue comparisons or adoption stats.
- Terms such as “defined roadmap,” “elevated user experience,” and “synthesized insights” might come across as jargon or unsubstantiated without evidence of outcomes.
- Multilingual rollout contribution is somewhat blurred—was the primary role operational guidance, tech execution support, or localization strategy? Clarifying ownership strengthens credibility.

Sampled Gemini critique:

SUGGESTED FIXES:
- Prioritize Quantifiable Impact: Review every bullet and ask: "Can this be a number?" If not, ask: "What downstream number did this enable?" For example, instead of "aided decision-making," state "influenced C-suite decision resulting in [X% budget allocation / Y product launch]."
- Explicitly State Candidate's Role: For collaborative efforts, use phrases that clarify individual contribution (e.g., "Led a cross-functional team to...", "My analysis informed...", "Spearheaded the design and implementation of...").
- Strengthen Action-Result Connection: Ensure the verb logically leads to the numerical outcome. If your action *informed* a change that yielded results, phrase it that way. If your action *was* the change, make that explicit.
  *Example (LinkedIn 1):* "Directed mixed-methods research, informing and driving design adjustments for multilingual profile features that cut support cases 59% and increased weekly user interactions by 20,000 (from a baseline of X)."
  *Example (LinkedIn 4):* "Led accessibility audits and informed UX design changes for LinkedIn gaming tools, resulting in a 42% increase in member sentiment on flagship games, validated by updated testing protocols."
- Add Context to Metrics: For every number, consider adding scale or baseline information. For LinkedIn's WAU, "1% WAU gain on a product with 10M+ users" gives immediate impact.
- Replace Subjective Terms: Change "winning" to "successful" or "high-performing." Replace "stronger alignment" with quantifiable evidence of improved value (e.g., "resulting in a 15% increase in customer satisfaction related to pricing").
- Leverage Original Specifics: Re-incorporate specific numbers like "11 language rollouts" where they add tangible detail and were omitted in the draft.

Sampled Claude critique:

BIGGEST RISKS / FAILURE MODES:
- The rewrite doesn't actually address vagueness—it adds MORE fluff. The question explicitly asks for a skeptical recruiter's perspective on what sounds inflated/unproven, but the draft just rewrites bullets with *different* vague language ("targeted research," "rigorous accessibility audits," "strategic touchpoint improvements"). A recruiter would still flag these as buzzwords.
  Mitigation: Actually answer the question first—list what's skeptical/weak in the ORIGINAL bullets before rewriting.
- No actual critique section provided. The user asked to "be a skeptical recruiter" and identify problems, but the draft jumps straight to rewrites. This misses 50% of the deliverable.
  Mitigation: Add a "RECRUITER RED FLAGS" section before the rewrites that calls out specific weasel words, unquantified claims, and passive voice.
- The rewrites often WEAKEN the evidence. Example: Original says "reduced support cases 59%"—clear metric. Rewrite says "cutting support cases by 59%...through design adjustments"—the added phrase dilutes impact and raises questions (which adjustments? were YOU the designer?).
  Mitigation: Only add context that strengthens attribution, not vague explanations.
- Missing the "top 5" constraint. The draft rewrites ~13 bullets across 3 companies. The user specifically asked for the top 5 weakest bullets.
  Mitigation: Rank bullets by weakness level and focus only on the 5 most problematic.